Issue 6: Where AI Economics Meets Your Wallet

Trying to make decisions on AI subscription plans? This one is for you.

Hello there! The past two weeks have been a roller coaster in the world of AI. What’s new with that, we can hear you saying. Well, the discussions for and against AI get more polarising by the minute, so let’s get to the meat quickly.

Note: This edition of AI UnGeeked features only three sections: News, Trends, and AI in Practice. We are experimenting with a tighter format to make the newsletter more digestible.

News That Matters

The illusion of thinking - Apple’s research paper on AI

Last week, Apple dropped a bomb into the AI world, with a research paper that questioned the efficacy and ‘reasoning’ capabilities of LRMs (Large Reasoning Models). Apple claims that as the complexity of tasks increases, these models break down. They cannot even solve the Tower of Hanoi puzzle (moving a stack of discs from one peg to another, one disc at a time, without ever placing a larger disc on a smaller one), a problem diligent 7-year-olds can solve, says the paper. Essentially, Apple claims that LRMs cannot do what a good algorithm can: solve advanced problems and win at chess.

However, the story doesn't end there. The Illusion of the Illusion of Thinking, a rebuttal paper authored by no less than Claude Opus (an LLM developed by Anthropic) plus some humans, attributes the findings of the Apple paper to flawed experimental design rather than fundamental model constraints.

Do Apple’s claims have veracity? Or is the BigTech company just feeling FOMO on trailing in AI and hence trying to game the system? Questions galore.

Is an Apple-Perplexity matrimony on the cards?

While we are on the topic of Apple’s FOMO, there is news that Apple is potentially looking at acquiring Perplexity, a real-time conversational AI search engine most recently valued at $14Bn. Whether this ends up as a buyout or as a partnership integrating Perplexity into Apple’s Safari browser is yet to be seen. Perplexity was last seen being courted by Meta, which ended up investing in Scale AI instead. More mergers and acquisitions are clearly yet to come in this space, sometimes for the tech, sometimes for the people, sometimes both.

One Trend of Note

Economics of AI: Getting Cheaper to Use, But Not Cheaper to Run

AI companies are in a race — one that’s as much about economics as it is about innovation.

Building large AI models is insanely expensive. Training a single model can cost over $100 million, and those costs keep rising as models get bigger and take on more complex tasks (remember we talked about the number of parameters?). Most of that spend goes into “compute”: the processing power needed not only to train these models but also to run them afterward.

That is because, once trained (which already requires massive compute), the model has to run over and over again for every user query, chatbot response, or business task. This is called inference, and it also uses compute. While the cost of a single inference is falling, the total number of inferences is exploding, with billions happening every day. As Amazon CEO Andy Jassy said, “training happens occasionally, but inference happens constantly.”

At scale, inference becomes a growing, ongoing cost that rises with usage. Even with better chips and smarter software, total costs keep going up. Why? Because cheaper usage drives more usage, which means more infrastructure, more cloud bills, and more pressure on AI companies to keep up.
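To see why the total bill can climb even as each query gets cheaper, here is a back-of-the-envelope sketch in Python. The numbers are made up purely for illustration and are not figures from any AI company: we assume the cost per query halves every year while the number of queries triples.

```python
def total_inference_cost(cost_per_query, queries_per_day, days=365):
    """Yearly inference bill = cost of one query x number of queries."""
    return cost_per_query * queries_per_day * days


# Hypothetical starting point, chosen only for illustration.
cost_per_query = 0.01          # dollars per query
queries_per_day = 100_000_000  # 100 million queries a day

yearly_bills = []
for year in range(1, 4):
    bill = total_inference_cost(cost_per_query, queries_per_day)
    yearly_bills.append(bill)
    print(f"Year {year}: ${cost_per_query:.4f} per query, "
          f"{queries_per_day:,} queries/day, yearly bill ${bill:,.0f}")
    cost_per_query /= 2   # assumption: unit cost halves each year
    queries_per_day *= 3  # assumption: usage triples each year
```

Under these assumed rates, the yearly bill grows by half each year even though every single query costs less than it did the year before. Whenever usage grows faster than unit cost falls, total spend goes up.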

And that’s just the compute side. Around it are expensive layers like research, data pipelines, engineering talent, and sales teams. Put together, the AI business model is a tough one to crack.

So even with booming revenue, the economics of AI are tricky. Take OpenAI, for example — it reportedly spent around $5 billion on compute alone in 2024, while bringing in an estimated $3.7 billion in revenue. And it’s not just OpenAI. BigTechs like Microsoft, Amazon, Google, and Meta have all seen their spending shoot up over the past 1-2 years. At the same time, their free cash flow margins dipped, showing just how much they’re pouring into scaling up their AI capabilities in the hopes of future monetisation.

So far, AI companies are trying everything from subscriptions and pay-per-use APIs to full-stack enterprise solutions and developer marketplaces to boost monetisation. But until margins improve and unit economics stabilise, the business of AI remains a high-stakes, capital-intensive game.

AI in Practice

Choosing The Right AI Subscription Plan

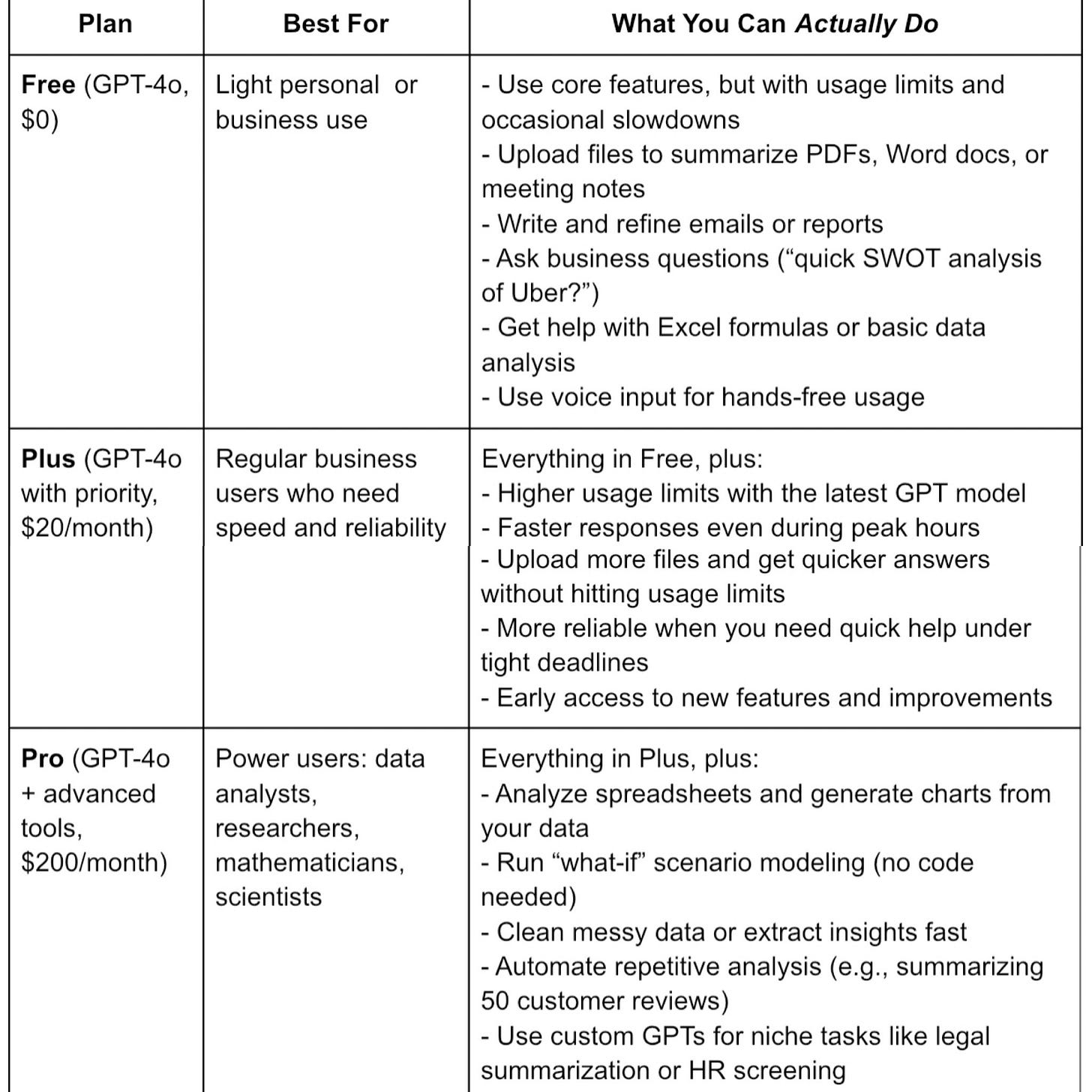

Among the various monetisation models AI companies are exploring, subscriptions are what you're most likely to run into first — so let’s start there. The explosion of AI apps has also brought a maze of subscription plans. To pay or not to pay, is the question. “It depends” is the cliched yet practical answer.

Let’s pick ChatGPT as an example.

Level 1: No-login free access

The simplest way to try ChatGPT. You can ask quick questions and get basic help, but there’s no memory, no voice or image support, and no access to the most up-to-date model. Think of it as a limited demo to get a feel for how it works.

Level 2: Login with free access

A major step up. You get memory, customization, and limited access to GPT-4o, the flagship model that supports text, images, and voice. But when you cross usage limits or during high-traffic times, it may switch to GPT-3.5 (text-only). It’s great for tasks like writing emails, summarizing files, or doing quick analysis, but depending on the free version for more complex tasks might impact timelines.

Level 3: ChatGPT Plus at a subscription fee of $20 / INR 1,700 per month

With Plus, you get consistent, priority access to GPT-4o. That means faster responses, higher usage limits and no downgrades to GPT-3.5. While free users can access some advanced features, Plus ensures they’re available when you need them — especially helpful if AI usage has become integral to your work.

Level 4: ChatGPT Pro at a subscription fee of $200 / INR 17,000 per month

Designed for power users who need advanced features for data-driven insights, visualisations, automation etc. Pro also unlocks OpenAI’s o-series reasoning models (like o3 and o4-mini) for deeper, multi-step problem-solving, plus early access to new features and models. It is particularly useful for scientists, mathematicians, researchers, and anyone tackling complex workflows.

Here’s a handy tabular comparison of levels 2-4, if you’re still figuring out whether to stick with the free version or upgrade:

For teams and businesses, ChatGPT also offers group plans starting at $25–30 (₹2,100–2,500) per user per month. These come with shared memory, admin controls, advanced data privacy, and a workspace where teams can collaborate more easily.

Google Gemini, the second most popular conversational AI app, has a similar subscription model: Google AI Premium costs ₹1,950 (~$23) per month. The bonus? You also get 2TB of Google Drive storage and deeper integration with the wider Google ecosystem.

Not sure which AI tool or plan is right for you? Just start using them! Try the free versions first. They’re surprisingly capable for everyday tasks. If you find them slowing you down or hitting limits, that’s your cue to upgrade. Paid plans offer better speed, reliability, and a few power features that can really help if you use AI regularly for work.
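If you like your rules of thumb written down, the advice above can be sketched as a tiny decision helper in Python. This is only an illustration: the function names `suggest_plan` and `annual_cost` and the yes/no inputs are our own invention, not anything OpenAI publishes, and the prices are the ones quoted in this issue, which may change.

```python
# Monthly prices in USD as quoted in this issue; check current pricing before deciding.
PLAN_PRICES_USD = {"Free": 0, "Plus": 20, "Pro": 200}


def suggest_plan(hits_free_limits, needs_heavy_reasoning):
    """Rough rule of thumb: start free, upgrade only when something holds you back."""
    if needs_heavy_reasoning:
        # Assumption: research-grade, multi-step workloads justify the top tier.
        return "Pro"
    if hits_free_limits:
        # Slowdowns or usage caps on the free tier are the cue to upgrade.
        return "Plus"
    return "Free"


def annual_cost(plan):
    """Yearly spend in USD for a plan billed monthly."""
    return PLAN_PRICES_USD[plan] * 12


plan = suggest_plan(hits_free_limits=True, needs_heavy_reasoning=False)
print(f"Suggested plan: {plan}, about ${annual_cost(plan)} a year")
```

Seeing the yearly figure next to the plan name is a useful sanity check: a monthly fee that looks small adds up, so it is worth confirming that the free tier is genuinely holding you back before you upgrade.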

With that, we sign off on this week’s issue. What did you find useful? What did you miss? What more do you want us to do? Tell us, so we can steer accordingly.

Cheers!