Issue 7: Search Shakeups, Shadow AI, and NotebookLM

The Whats, How-Tos, and Impact of AI Usage

We’re back with another dose of AI UnGeeked. This issue has the usual mix: news you might have missed, a trend worth watching, and a hands-on tool to put AI to work. Let’s go.

News That Matters

News Sites Lose Traffic as Google Leans Into AI

Since Google launched AI Overviews for Search in May 2024, 37 of the top 50 news sites have seen year-over-year traffic declines. The feature gives users quick, AI-generated answers at the top of search results, so fewer people click through to the original source. According to SimilarWeb, Forbes and HuffPost each lost 40%, while DailyMail, CNN, and The Post saw double-digit drops too. Publishers say this threatens their ability to fund journalism and keep their business models alive.

Google has pushed back, saying Search is still sending more traffic overall to sites than it did a year ago, even if traffic to some news sites is down. It also says AI Overviews are usually suppressed for trending news, and that mobile app traffic is not captured by SimilarWeb.

But there’s more change coming. Google recently launched AI Mode for search, a chatbot-style tool designed to compete with ChatGPT. It responds in a more conversational format and shows even fewer links, which could put additional pressure on publishers. In response, outlets like The Atlantic and The Washington Post are focusing on direct reader relationships through subscriptions, newsletters, and events to stay financially sustainable.

Early Legal Wins for AI Giants, but Copyright War Continues

Meta and Anthropic just got early wins in the AI copyright battles. Courts ruled it’s okay to train AI on copyrighted books, as long as the outputs aren’t direct copies. Anthropic was cleared for using legally bought books to train Claude, though it still faces trial over pirated ones. Meta’s case was dismissed since the authors couldn’t show real harm.

Meanwhile, OpenAI and Microsoft are facing a new class-action suit, focusing on three nonfiction books. OpenAI is accused of using shadow libraries full of unlicensed content, while Microsoft is being pulled in for allegedly helping spread that material by integrating it into its products.

All of this points to a bigger unresolved question: What counts as fair use when AI models are trained on copyrighted content like books, music, news, or images?

The fight is still very much on.

One Trend of Note

Shadow AI: A Growing Risk Hiding in Plain Sight

Ever found a free AI tool that slashed a 2-hour task down to 10 minutes, but your company didn’t officially support it, so you accessed the tool with your personal account instead?

You’re not alone. Across industries, employees are increasingly using free, unauthorized AI tools to speed up or improve their work: summarizing reports, drafting documents, or analyzing data. This use of AI tools at work without official approval or oversight is known as Shadow AI (a natural extension of Shadow IT). The catch? Many employees don’t realize they might be exposing sensitive company data, creating a migraine for employers.

What’s the big deal?

Shadow AI may seem harmless, but it comes with serious risks. The moment someone pastes internal data into an external tool, it becomes a security concern. Free, unauthorized tools usually lack strong encryption, the ability to disable data retention, and audit trails for when something goes wrong, all of which enterprise-grade platforms and accounts provide.

That opens the door to data leaks, IP theft, and potential violations of international regulations like GDPR or HIPAA, or Indian ones like the DPDP Act. The result can be steep fines, reputational damage, or both. No surprise that shadow AI was recently ranked among the top five global cybersecurity threats, with a severity score of 7.8 out of 10.

Real examples that could be happening at your workplace:

A strategy analyst pastes pricing info into ChatGPT to make a slide more readable, including confidential figures not meant to leave the company.

A product team uses Google Gemini to summarize consumer research, with prompts that include names, user quotes, and internal ratings from a private study.

An operations lead asks Claude to clean up a supply chain Excel output, unknowingly uploading confidential vendor data.

What can you do as an employee or manager?

You don’t need to stop using AI, but it’s important to use it carefully. If you’re using third-party unapproved tools for any reason, then:

Don’t paste anything confidential or sensitive in your AI prompts.

Stick to low-risk tasks, like rewording public content or writing rough drafts without confidential data.

Assume anything you type could be stored. If it’s not safe to post publicly, don’t use it in an AI prompt.

If you manage a team, talk to them about what’s okay to use and share best practices. Most misuse happens when people simply don’t know better.

What should companies do?

Blocking AI use is neither helpful nor sufficient. Instead, companies should focus on guidance and safer alternatives:

AI usage is going to happen, so accept and embrace it. Provide access to AI tools that come with enterprise-grade privacy protections.

Have a responsible-use AI policy and governance in place.

Monitor AI tool usage to understand how employees are using them. Set clear, practical policies: for example, allow chat-based use for general tasks but block uploads of sensitive files.

Educate employees with specific examples of safe and unsafe use. Don’t just say “don’t share data”.

Shadow AI is already part of everyday work in many companies. The real risk is when it stays unseen and unmanaged. Companies need to step up by setting clear guidelines, offering approved tools, and helping employees use AI safely.

AI in Practice

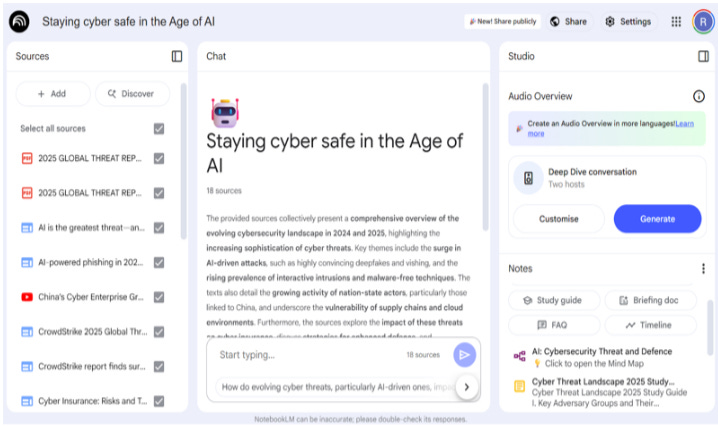

NotebookLM: Your Research, Only Faster

Ever wish ChatGPT or Google Gemini would just stick to the brief and stop making stuff up? Meet NotebookLM, Google’s AI-powered research assistant. Wait, another one? Didn’t we cover one last time?

Yes, but this one’s different. It works only with sources you upload or approve. Give it PDFs, links, YouTube videos, or let it find sources for you to sign off on. Every answer is built from those, and only those.

Just grounded, source-based responses. Hey presto, hallucinations begone!

Here’s how to get rolling:

When you open a Notebook, you’ll see three panels: Sources, Chat, and Notes (or Studio, if you’re trying the podcast feature; we’ll get to that).

Upload a PDF or toss in a mix of documents, links, and videos. It’ll handle it all.

No sources? Just give it a topic. It’ll suggest material, and you decide what to include.

Use the chat panel to ask questions like you would with any conversational AI tool. But here, every answer comes from your selected sources, not web data or model training data.

Try out the pre-built outputs. Want a summary? It’ll do that. Need a timeline across 18 sources? Done. A structured FAQ, a study guide, a mind map? All there. You can even save any answers you like into the Notes area for quick access later.

Now for the impressive features. Every answer includes citations. Not vague "based on your content" citations, but clickable references showing exactly which passage in your source the answer draws on. If you’ve ever tried to fact-check ChatGPT, this is a dream.

It can even turn your content into a podcast: a fake chat between two AI voices, complete with ums, you knows, and awkward pauses that weirdly make it feel real. No mic, no editing, no studio. Just click. Google says it’s experimental. It’s already scarily good.

Overall, if your job involves reading a lot, writing a lot, or making sense of piles of content, NotebookLM is worth trying. It’s free to start, works in your browser or on your phone, and just needs a Google account. It’s like having a focused, grounded, slightly nerdy assistant that quietly gets things done.

We fed it a bunch of cybersecurity articles to generate sample outputs. The mind map? Sharp. The FAQ? On point. The timeline? Clean and detailed. And the podcast? Honestly, spooky good.

Want to see what it produced? See our trial use case on cybersecurity here.

As always, do share your feedback and questions as responses to this email or in the comments section. See you in a fortnight.

Cheers!


Search is eating the news, Shadow AI is eating your compliance policy, and NotebookLM is eating my weekend